Agilicious: Open-source and open-hardware agile quadrotor for vision-based flight

Abstract

Autonomous, agile quadrotor flight raises fundamental challenges for robotics research in terms of perception, planning, learning, and control. A versatile and standardized platform is needed to accelerate research and let practitioners focus on the core problems. To this end, we present Agilicious, a codesigned hardware and software framework tailored to autonomous, agile quadrotor flight. It is completely open source and open hardware and supports both model-based and neural network–based controllers. Also, it provides high thrust-to-weight and torque-to-inertia ratios for agility, onboard vision sensors, graphics processing unit (GPU)–accelerated compute hardware for real-time perception and neural network inference, a real-time flight controller, and a versatile software stack. In contrast to existing frameworks, Agilicious offers a unique combination of flexible software stack and high-performance hardware. We compare Agilicious with prior works and demonstrate it on different agile tasks, using both model-based and neural network–based controllers. Our demonstrators include trajectory tracking at up to 5g and 70 kilometers per hour in a motion capture system, and vision-based acrobatic flight and obstacle avoidance in both structured and unstructured environments using solely onboard perception. Last, we demonstrate its use for hardware-in-the-loop simulation in virtual reality environments. Because of its versatility, we believe that Agilicious supports the next generation of scientific and industrial quadrotor research.

INTRODUCTION

Quadrotors are extremely agile vehicles. Exploiting their agility in combination with full autonomy is crucial for time-critical missions, such as search and rescue, aerial delivery, and even flying cars. For this reason, over the last decade, research on autonomous, agile quadrotor flight has continually pushed platforms to higher speeds and agility (1–10).

To further advance the field, several competitions have been organized—such as the autonomous drone racing series at recent IROS (International Conference on Intelligent Robots and Systems) and NeurIPS conferences (3, 11–13) and the AlphaPilot challenge (6, 14)—with the goal to develop autonomous systems that will eventually outperform expert human pilots. Million-dollar projects, such as AgileFlight (15) and Fast Lightweight Autonomy (FLA) (4), have also been funded by the European Research Council and the U.S. government, respectively, to further push research. Agile flight comes with ever-increasing engineering challenges because performing faster maneuvers with an autonomous system requires more capable algorithms, specialized hardware, and proficiency in system integration. As a result, only a small number of research groups have undertaken the notable overhead of hardware and software engineering and have developed the expertise and resources to design quadrotor platforms that fulfill the requirements on weight, sensing, and computational budget necessary for autonomous agile flight. This work aims to bridge this gap through an open-source agile flight platform, enabling everyone to work on agile autonomy with minimal engineering overhead.

The platforms and software stacks developed by research groups (2, 4, 16–21) vary strongly in their choice of hardware and software tools. This is expected, because optimizing a robot with respect to different tasks based on individual experience in a closed-source research environment leads to a fragmentation of the research community. For example, although many research groups use the Robot Operating System (ROS) middleware to accelerate development, publications are often difficult to reproduce or verify because they build on a plethora of previous implementations of the authoring research group. In the worst case, building on an imperfect or even faulty closed-source foundation can lead to wrong or nonreproducible conclusions, slowing down research progress. To break this cycle and to democratize research on fast autonomous flight, the robotics community needs an open-source and open-hardware quadrotor platform that provides the versatility and performance needed for a wide range of agile flight tasks. Such an open and agile platform does not yet exist, which is why we present Agilicious, an open-source and open-hardware agile quadrotor flight stack (https://agilicious.dev) summarized in Fig. 1.

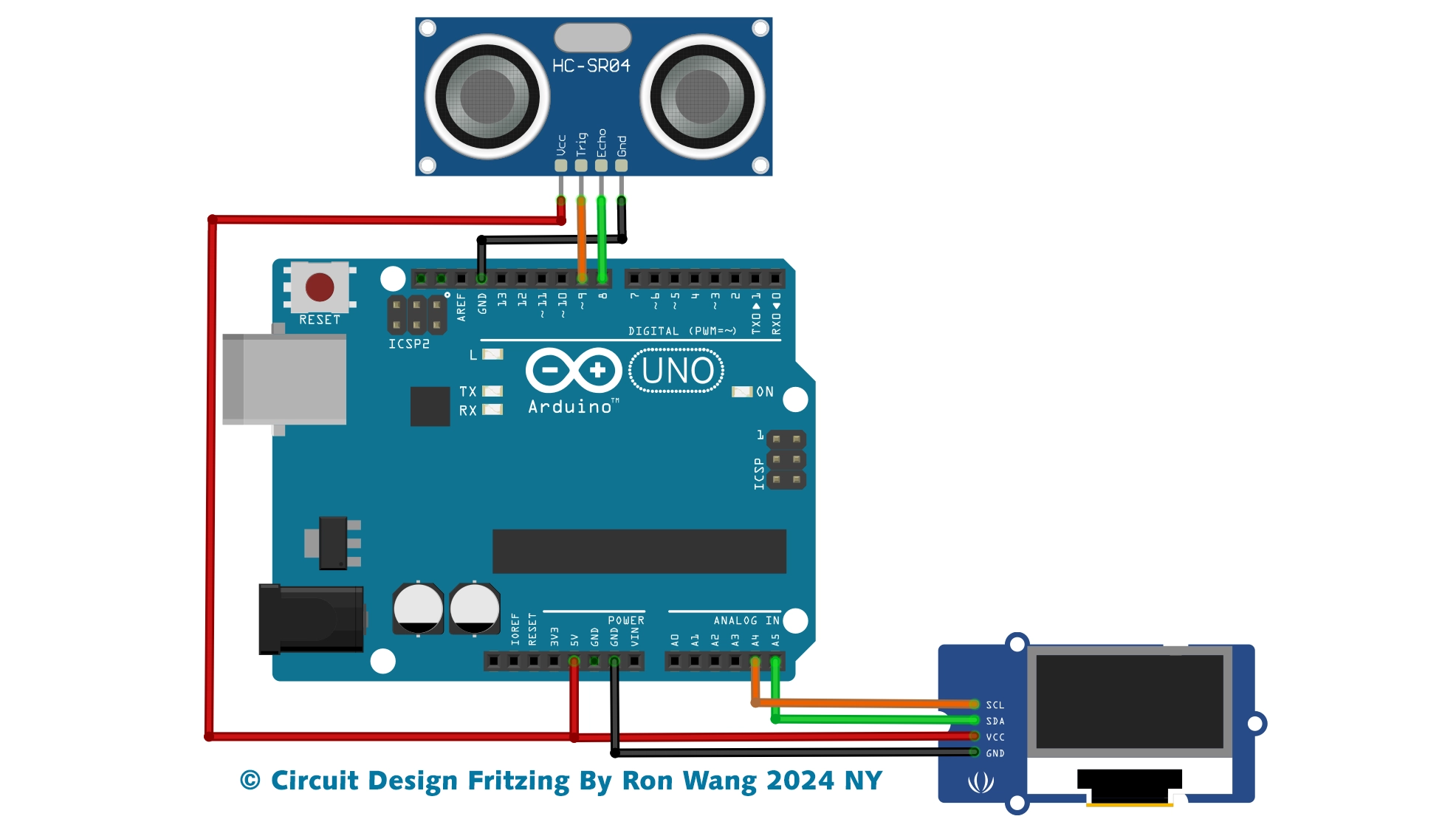

Fig. 1. The Agilicious software and hardware quadrotor platform are tailored for agile flight while featuring powerful onboard compute capabilities through an NVIDIA Jetson TX2.

The versatile sensor mount allows for rapid prototyping with a wide set of monocular or stereo camera sensors. As a key feature, the software of Agilicious is built in a modular fashion, allowing rapid software prototyping in simulation and seamless transition to real-world experiments. The Agilicious Pilot encapsulates all logic required for agile flight while exposing a rich set of interfaces to the user, from high-level pose commands to direct motor commands. The software stack can be used in conjunction with a custom modular simulator that supports highly accurate aerodynamics based on BEM (40) or with RotorS (60), hardware-in-the-loop, and rendering engines such as Flightmare (44). Deployment on the physical platform only requires selecting a different bridge and a sensor-compatible estimator.

To reach the goal of creating an agile, autonomous, and versatile quadrotor research platform, two main design requirements must be met by the quadrotor: It must carry the required compute hardware needed for autonomous operation, and it must be capable of agile flight.

To meet the first requirement on computing resources needed for true autonomy, a quadrotor should carry sufficient compute capability to concurrently run estimation, planning, and control algorithms onboard. With the emergence of learning-based methods, efficient hardware acceleration for neural network inference is also required. To meet the second requirement and enable agile flight, the platform must deliver an adequate thrust-to-weight ratio (TWR) and torque-to-inertia ratio (TIR). The TWR can often be enhanced using more powerful motors, which in turn require larger propellers and thus a larger platform. However, the TIR typically decreases with higher weight and size, because the moment of inertia increases quadratically with the size and linearly with the weight. As a result, it is desirable to design a lightweight and small platform (22, 23) to maximize agility (i.e., maximize both TWR and TIR). The platform must therefore strike the best trade-off, because maximizing compute resources competes against maximizing flight performance.
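The scaling argument above can be illustrated with a minimal sketch. It assumes a crude model that is not taken from this work: roll or pitch torque is approximated as half the total thrust acting on one arm length, and inertia as mass times arm length squared. The function names and all numbers are hypothetical placeholders, not measurements of any platform.

```python
# Illustrative scaling sketch; every number here is a made-up placeholder.

def thrust_to_weight(max_thrust_n: float, mass_kg: float, g: float = 9.81) -> float:
    """Ratio of maximum collective thrust to vehicle weight."""
    return max_thrust_n / (mass_kg * g)


def torque_to_inertia(max_thrust_n: float, mass_kg: float, arm_m: float) -> float:
    """Crude roll/pitch torque-to-inertia estimate.

    Assumption: torque ~ (half of total thrust) * arm, inertia ~ mass * arm^2.
    """
    torque = 0.5 * max_thrust_n * arm_m
    inertia = mass_kg * arm_m ** 2
    return torque / inertia


# A small vehicle and a scaled-up one: 4x thrust, 4x mass, 2x arm length.
small = dict(max_thrust_n=30.0, mass_kg=0.75, arm_m=0.10)
large = dict(max_thrust_n=120.0, mass_kg=3.0, arm_m=0.20)

twr_small = thrust_to_weight(small["max_thrust_n"], small["mass_kg"])
twr_large = thrust_to_weight(large["max_thrust_n"], large["mass_kg"])
tir_small = torque_to_inertia(**small)
tir_large = torque_to_inertia(**large)

print(f"TWR small/large: {twr_small:.2f} / {twr_large:.2f}")
print(f"TIR small/large: {tir_small:.1f} / {tir_large:.1f}")
```

Under these assumptions the two vehicles share the same TWR, but doubling the arm length halves the TIR, which is the reason a compact frame is preferred for agility.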

Apart from hardware design considerations, a quadrotor research platform needs to provide the software framework for flexible usage and reproducible research. This entails the abstraction of hardware interfaces and a general codesign of software and hardware necessary to exploit the platform’s full potential. Such codesign must account for the capabilities and limitations of each system component, such as the complementary real-time capabilities of common operating systems and embedded systems, communication latencies and bandwidths, system dynamics bandwidth limitations, and efficient usage of hardware accelerators. In addition to optimally using hardware resources, the software should be built in a modular fashion to enable rapid prototyping through a simple exchange of components, in both simulation and real-world applications. This modularity enables researchers to test and experiment with their research code, without the requirement to develop an entire flight stack, accelerating time to development and facilitating reproducibility of results. Last, the software stack should run on a broad set of computing boards and be efficient and easy to transfer and adapt by having minimal dependencies and provide known interfaces, such as the widely used ROS.

The complex set of constraints and design objectives is difficult to meet. There exists a variety of previously published open-source research platforms, which, although well designed for low-agility tasks, could only satisfy a subset of the aforementioned hardware and software constraints. In the following section, we list and analyze prominent examples such as the FLA platform (4), the MRS quadrotor (20), the ASL-Flight (17), the MIT-Quad (24), the GRASP-Quad (2), or our previous work (18).

The FLA platform (4) relies on many sensors, including lidars and laser range finders in conjunction with a powerful onboard computer. Although this platform can easily meet autonomous flight computation and sensing requirements, it does not allow agile flight beyond 2.4g of thrust, limiting the flight envelope to near-hover flight. The MRS platform (20) provides an accompanying software stack and features a variety of sensors. Although this hardware and software solution allows fully autonomous flight, the actuation renders the system not agile with a maximum thrust-to-weight ratio of 2.5. The ASL-Flight (17) is built on the DJI Matrice 100 platform and features an Intel NUC as the main compute resource. Similarly to the MRS platform, the ASL-Flight has very limited agility due to its weight being on the edge of the platform’s takeoff capability. The comparably smaller GRASP-Quad proposed in (2) operates with only an onboard inertial measurement unit (IMU) and a monocular camera while having a weight of only 250 g. Nevertheless, the Qualcomm Snapdragon board installed on this platform lacks computational power, and the actuation limits the maximal acceleration to below 1.5g. Motivated by drone racing, the MIT-Quad (24) reported accelerations of up to 2.1g and was further equipped with an NVIDIA Jetson TX2 in (25); however, it does not reach the agility of Agilicious and contains proprietary electronics. Last, the quadrotor proposed in (18) is a research platform designed explicitly for agile flight. Although the quadrotor featured a high thrust-to-weight ratio of up to 4, its compute resources are very limited, prohibiting truly autonomous operation. All these platforms are optimized for either relatively heavy sensor setups or agile flight in nonautonomous settings. Whereas the former platforms lack the required actuation power to push the state of the art in autonomous agile flight, the latter have insufficient compute resources to achieve true autonomy.

Last, several mentioned platforms rely on Pixhawk-PX4 (26), the Parrot (27), or DJI (28) low-level controllers, which are mostly treated as blackboxes. This, together with the proprietary nature of the DJI systems, limits control over the low-level flight characteristics, which not only limits interpretability of results but also negatively affects agility. Full control over the complete pipeline is necessary to truly understand aerodynamic and high-frequency effects, model and control them, and exploit the platform to its full potential.

Apart from platforms mainly developed by research laboratories, several quadrotor designs are proposed by industry [Skydio (29), DJI (28), and Parrot (27)] and open-source projects [PX4 (26), Paparazzi (21), and Crazyflie (30)]. Although Skydio (29) and DJI (28) both develop platforms featuring a high level of autonomy, they do not support interfacing with custom code and therefore are of limited value for research and development purposes. Parrot (27) provides a set of quadrotor platforms tailored for inspection and surveillance tasks that are accompanied by limited software development kits that allow researchers to program custom flight missions. In contrast, PX4 (26) provides an entire ecosystem of open-source software and hardware as well as simulation. Although these features are extremely valuable especially for low-speed flight, both cross-platform hardware and software are not suited to push the quadrotor to agile maneuvers. Similarly, Paparazzi (21) is an open-source project for drones, which supports various hardware platforms. However, the supported autopilots have very limited onboard compute capability, rendering them unsuited for agile autonomous flight. The Crazyflie (30) is an extremely lightweight quadrotor platform with a takeoff weight of only 27 g. The minimal hardware setup leaves no margin for additional sensing or computation, prohibiting any nontrivial navigation task.

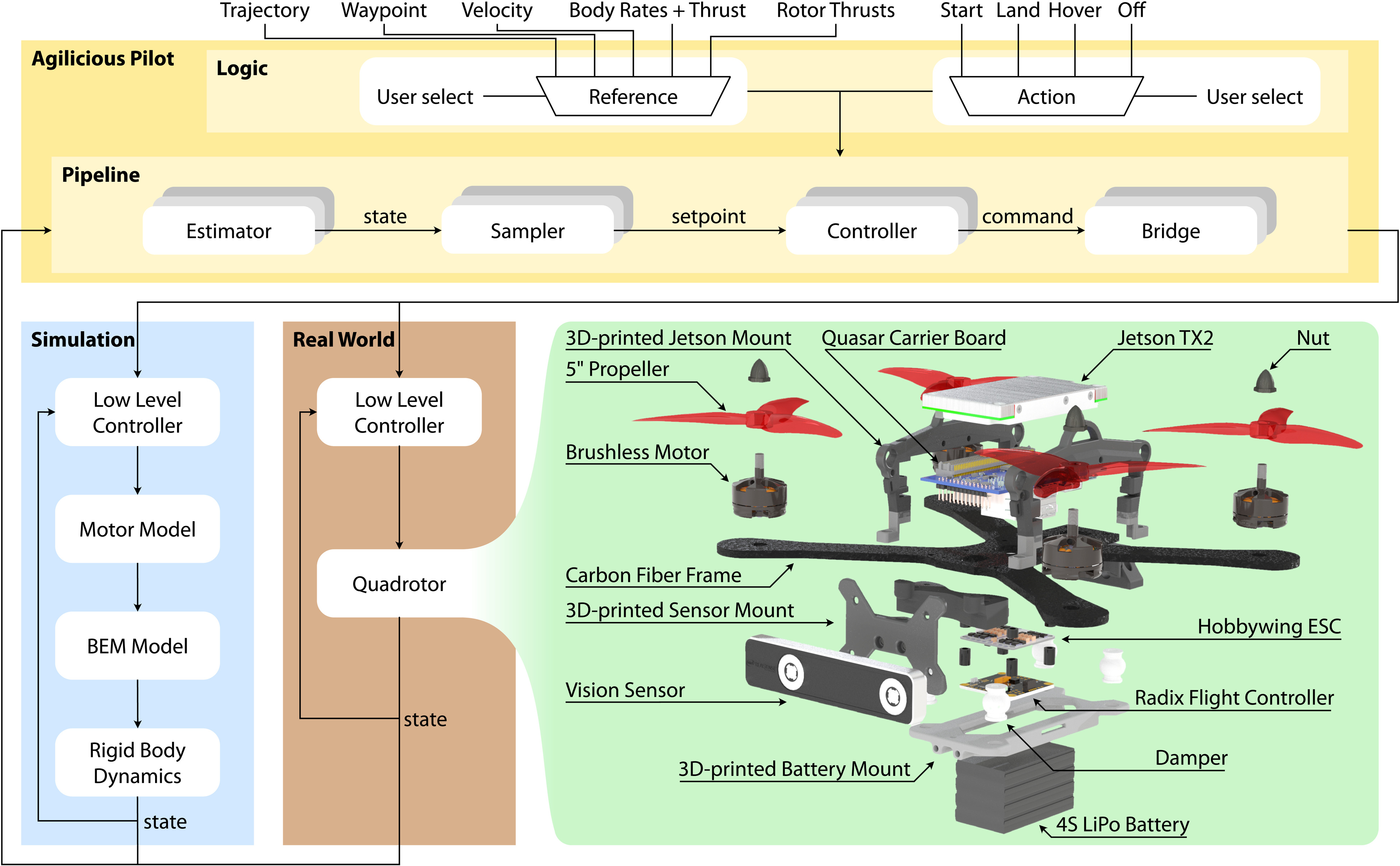

To address the requirements of agile flight and the shortcomings of existing works, and to enable the research community to progress fast toward agile flight, we present an open-source and open-hardware quadrotor platform for agile flight at more than 5g acceleration while providing substantial onboard compute and versatile software. The hardware design leverages recent advances in motor, battery, and frame design initiated by the first-person view (FPV) racing community. The design objectives resulted in a lightweight 750-g platform with a maximal speed of 131 km/hour. This high-performance drone hardware is combined with a powerful onboard computer that features an integrated graphics processing unit (GPU), enabling complex neural network architectures to run at high frequency while concurrently running optimization-based control algorithms at low latency onboard the drone. The most important features of the Agilicious framework are summarized and compared with relevant research and industrial platforms in Fig. 2, which also presents a qualitative comparison of the competing objectives of onboard computational power and agility.

Fig. 2. A comparison of different available consumer and research platforms with respect to available onboard compute capability and agility.

The platforms are compared on the basis of their openness to the community, support of simulation and onboard computation, used low-level controller, CPU power (reported according to publicly available benchmarks, www.cpubenchmark.net, and corresponding to the speed of solving a set of benchmark algorithms that represent a generic program), and the availability of onboard general-purpose GPU. The agility of the platforms is expressed in terms of TWR; however, we also report the maximal velocity as an agility indicator due to limited information about the commercial platforms. The PX4 (26) and the Paparazzi (21) are rather low-level autopilot frameworks without high-level computation capability, but they can be integrated in other high-level frameworks (4, 20). The open-source frameworks FLA (4), ASL (17), and MRS (20) have relatively large weight and low agility. The DJI (28), Skydio (29), and Parrot (27) are closed-source commercial products that are not intended for research purposes. The Crazyflie (30) does not allow for sufficient onboard compute or sensing, while the MIT (25) and GRASP (2) platforms are not available open source. Last, our proposed Agilicious framework provides agile flight performance, onboard GPU-accelerated compute capabilities, as well as open-source and open-hardware availability.

In codesign with the hardware, we complete the drone design with a modular and versatile software stack, called Agilicious. It provides a software architecture that allows easy transfer of algorithms from prototyping in simulation to real-world deployment in instrumented experiment setups, and even pure onboard-sensing applications in unknown and unstructured environments.

This modularity is key for fast development and high-quality research, because it allows users to quickly substitute existing components with new prototypes and enables all software components to be used in stand-alone testing applications, experiments, benchmarks, or even completely different applications.
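The idea of exchanging components without touching the rest of the stack can be sketched as follows. This is an illustration only: the class names (`Command`, `Bridge`, `SimulationBridge`, `HardwareBridge`, `HeightController`, `Pilot`) are hypothetical and do not reproduce the actual Agilicious interfaces, and the state estimator is omitted for brevity.

```python
from dataclasses import dataclass
from typing import List, Protocol, Tuple


@dataclass
class Command:
    collective_thrust: float                # N
    body_rates: Tuple[float, float, float]  # rad/s


class Bridge(Protocol):
    """Anything that can deliver a command to a (simulated or real) vehicle."""
    def send(self, command: Command) -> None: ...


class SimulationBridge:
    """Hypothetical bridge that hands commands to a simulator (here: a list)."""
    def __init__(self) -> None:
        self.received: List[Command] = []

    def send(self, command: Command) -> None:
        self.received.append(command)


class HardwareBridge:
    """Hypothetical bridge that would serialize commands for a flight controller."""
    def __init__(self) -> None:
        self.frames: List[str] = []

    def send(self, command: Command) -> None:
        rates = ",".join(f"{r:.3f}" for r in command.body_rates)
        self.frames.append(f"{command.collective_thrust:.3f};{rates}")


class HeightController:
    """Toy proportional height controller standing in for any control module."""
    def __init__(self, mass: float, gain: float) -> None:
        self.mass, self.gain = mass, gain

    def compute(self, height: float, target: float) -> Command:
        thrust = self.mass * (9.81 + self.gain * (target - height))
        return Command(thrust, (0.0, 0.0, 0.0))


class Pilot:
    """Runs the controller and forwards its output through the chosen bridge."""
    def __init__(self, controller: HeightController, bridge: Bridge) -> None:
        self.controller, self.bridge = controller, bridge

    def step(self, height: float, target: float) -> Command:
        command = self.controller.compute(height, target)
        self.bridge.send(command)
        return command


# The same controller runs unchanged; only the bridge differs.
controller = HeightController(mass=0.75, gain=2.0)
sim_pilot = Pilot(controller, SimulationBridge())
real_pilot = Pilot(controller, HardwareBridge())
sim_command = sim_pilot.step(height=1.0, target=1.5)
real_command = real_pilot.step(height=1.0, target=1.5)
```

In this sketch the controller produces identical commands in both cases, so moving from simulation to hardware amounts to constructing the pilot with a different bridge object.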

The hardware and software presented in this work have been developed, tested, and refined throughout a series of research publications (3, 9, 10, 31–35). All these publications share the ambition to push autonomous drones to their physical limits. The experiments, performed in a diverse set of environments, demonstrate the versatility of Agilicious by deploying different estimation, control, and planning strategies on a single physical platform. The flexibility to easily combine and replace both hard- and software components in the flight stack while operating on a standardized platform facilitates testing new algorithms and accelerates research on fast autonomous flight.

RESULTS

Our experiments, conducted in simulation and in the real world, demonstrate that Agilicious can be used to perform cutting-edge research in the fields of agile quadrotor control, quadrotor trajectory planning, and learning-based quadrotor research. We evaluate the capabilities of the Agilicious software and hardware stack in a large set of experiments that investigate trajectory tracking performance, latency of the pipeline, and combinations of Agilicious with a set of commercially or openly available vision-based state estimators. Last, we present two demonstrators of recent research projects that build on Agilicious.

Trajectory tracking performance

In this section, we demonstrate the tracking performance of our platform by flying an aggressive time-optimal trajectory in a drone racing scenario. In addition, to benchmark our planning and control algorithms, we compete against a world-class FPV drone racing pilot, as reported in (10). As illustrated in Fig. 3, our drone racing track consists of seven gates that need to be traversed in a predefined order as fast as possible. The trajectory used for this evaluation reaches speeds of 60 km/hour and accelerations of 4g. Flying through gates at such high speed requires precise state estimates, which is still an open challenge using vision-based state estimators (36). For this reason, we conduct these experiments in an instrumented flight volume of 30 m × 30 m × 8 m (7200 m³), equipped with 36 VICON cameras that provide precise pose measurements at 400 Hz. However, even when provided with precise state estimation, accurately tracking such aggressive trajectories poses considerable challenges with respect to the controller design, which usually requires several iterations of algorithm development and substantial tuning effort. The proposed Agilicious flight stack allows us to easily design, test, and deploy different control methods by first verifying them in simulation and then fine-tuning them in the real world. The transition from simulation to real-world deployment requires no source code changes or adaptations, which reduces the risk of crashing expensive hardware and is one of the major features of Agilicious for rapid prototyping. Figure 3 includes a simulated flight that shows similar characteristics and error statistics compared with the real-world flights described next.

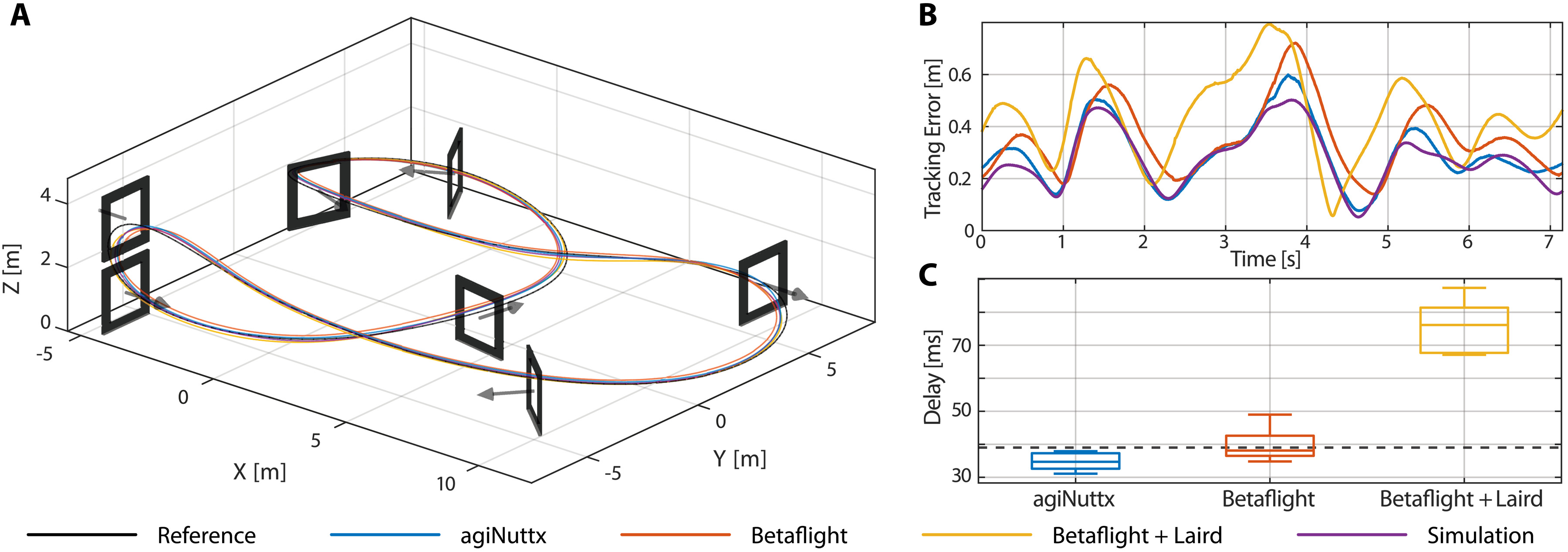

Fig. 3. An agile trajectory with speeds up to 60 km/hour and an acceleration of 4g, executed in an indoor instrumented flight volume.

We compare multiple different drone configurations, including our own low-level flight controller software agiNuttx, an off-the-shelf BetaFlight controller, the BetaFlight controller together with offboard computing and remote control through a Laird wireless transmitter, and our included simulation. (A) An overview of the flown trajectory. (B) The tracking errors along a single lap over all three configurations. Our provided agiNuttx achieves the best tracking performance, followed by BetaFlight combined with onboard computation. In contrast, offloading computation from the drone and controlling it remotely significantly affects performance. This is due to the massively increased latency, depicted in (C), where, for reference, the motor time constant of 39.1 ms is marked as a dashed line (- - -). (C) shows Tukey box plots with the horizontal lines marking the median, the boxes marking the quartiles, and the whiskers marking minimum/maximum. In addition, in (B), it is visible that the simulation exhibits very similar error characteristics because of our accurate aerodynamic modeling.

We evaluate three different system and control approaches including onboard computation with an off-the-shelf BetaFlight (37) flight controller, our custom open-source agiNuttx controller, and an offboard control scenario. These three system configurations represent various use cases of Agilicious, such as running state-of-the-art single-rotor control onboard the drone using our agiNuttx described in the “Flight hardware” section, or simple remote control by executing Agilicious on a desktop computer and forwarding the commands to the drone. All configurations use the motion capture state estimate and our single-rotor model-predictive control (MPC) described in Materials and Methods (38) as high-level controller. We use the single-rotor thrust formulation to correctly account for actuation limits, but use body rates and collective thrust as command modality. The first configuration runs completely onboard the drone with an additional low-level controller in the form of incremental nonlinear dynamic inversion (INDI) as described in Materials and Methods (38). It uses the MPC’s output to compute refined single-rotor thrust commands using INDI, to reduce the sensitivity to model inaccuracies. These single-rotor commands are executed using our agiNuttx flight controller with closed-loop motor speed tracking.

The second configuration also runs onboard and directly forwards the body rate and collective thrust command from the MPC to a BetaFlight (37) controller. This represents the most simplistic system that does not require flashing the flight controller and is compatible with a wide range of readily available off-the-shelf hobbyist drone components. However, in this configuration, the user does not get any IMU or motor speed feedback, because those are not streamed by BetaFlight.

The third configuration is identical to the second, except that the Agilicious flight stack runs offboard on a desktop or laptop computer. The body rate and collective thrust commands from the MPC are streamed to the drone using a serial wireless link implemented through LAIRD (39). This configuration allows running computationally demanding algorithms, such as GPU-accelerated neural networks, with minimal modifications. However, the additional wireless command transmission introduces higher latency, which can degrade the control performance.

Last, the Agilicious simulation is executed using the same setup as the first configuration. It uses accurate models for the quadrotor and motor dynamics, as well as a BEM aerodynamic model as described in (40).

Figure 3 depicts the results of these trajectory tracking experiments. Our first proposed configuration (i.e., with onboard computation and the custom agiNuttx flight controller) achieves the best overall tracking performance with the lowest average positional root mean square error (RMSE) of just 0.322 m at up to 60 km/hour and 4g. Next is the second configuration with BetaFlight, still achieving less than 0.385-m average positional RMSE. Last, the third configuration with offboard control exhibits higher latency, leading to an increased average positional RMSE of 0.474 m. As can be seen, our simulation closely matches the performance observed in the real world in the first configuration. The simulated tracking results in a slightly lower error of 0.320 m RMSE, because even state-of-the-art aerodynamic models (40) fail to reproduce the highly nonlinear and chaotic real aerodynamics. This simulation accuracy allows a seamless transition from simulation prototyping to real-world verification and is one of the prominent advantages of Agilicious.
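For readers who want to reproduce this kind of metric on their own logs, a positional RMSE can be computed as sketched below. The sketch assumes the error at each sample is the Euclidean distance between time-aligned reference and measured positions; the exact definition used for the reported values is not spelled out here, and the function name and sample data are hypothetical.

```python
import math


def positional_rmse(reference, actual):
    """Root mean square of the Euclidean position error over all samples.

    Both arguments are equally long sequences of (x, y, z) positions in
    meters, assumed to be sampled at the same time instants.
    """
    if not reference or len(reference) != len(actual):
        raise ValueError("trajectories must be non-empty and equally long")
    squared_errors = [
        sum((r - a) ** 2 for r, a in zip(p_ref, p_act))
        for p_ref, p_act in zip(reference, actual)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Made-up three-sample trajectory, for illustration only.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
actual = [(0.0, 0.3, 0.0), (1.0, 0.0, 0.4), (2.0, 0.0, 0.0)]
rmse = positional_rmse(reference, actual)
print(f"positional RMSE: {rmse:.3f} m")
```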

Additional experiments motivating the choice of MPC as outer-loop controller and its combination with INDI can be found in (38), details on the planning of the time-optimal reference trajectory are elaborated on in (10), and additional extensions to the provided MPC for fast flight are in (41) and for rotor failure MPC in (42). These related publications also showcase performance at even higher speeds of up to 70 km/hour and accelerations reaching 5g. The following section gives further insights into the latency of the three configurations tested here, including on- and offboard control architecture, as well as BetaFlight and agiNuttx flight controllers.

Control latency

All real systems with finite resources suffer from communication and computation delays, whereas dynamic systems and even filters can introduce additional latency and bandwidth limitations. Analyzing and minimizing these delays is fundamental for the performance in any control task, especially when tracking agile and fast trajectories in the presence of model mismatch, disturbances, and actuator limitations. In this section, we conduct a series of experiments that aim to analyze and determine the control latency, from command to actuation, of the proposed architecture for the three different choices of low-level configurations: our agiNuttx, BetaFlight, and BetaFlight with offboard control.

For this experiment, the quadrotor has been mounted on a load cell [ATI Mini 40 SI-20-1; (43)] measuring the force and moment acting on the platform. To measure the latency, a collective thrust step command of 12 N is sent to the corresponding low-level controller while measuring the exerted force on the drone. These force sensor measurements are time-synchronized with the collective thrust commands and fitted through a first-order system representing motor dynamics. The measured delays are reported in Fig. 3C as the difference between the time at which the high-level controller sends the collective thrust command and the time at which the measured force effectively starts changing. The results show that agiNuttx has the lowest latency at 35 ms, with BetaFlight slightly slower at 40.15 ms. A large delay can be observed when using offboard control and sending the commands via the Laird connection to the drone, in which case the latency rises to more than 75 ms. The effect of these latencies is also reflected in the tracking error in Fig. 3 (A and B). To put the measured delays into perspective, the motor’s time constant of 39.1 ms, which dictates the actuator bandwidth limitations, is indicated in Fig. 3C. Last, the “Hardware in the loop simulation” section gives some insight into the latencies introduced when using Agilicious together with Flightmare (44) in a hardware-in-the-loop setup.
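One way to extract a delay from such a step experiment is sketched below. It assumes a dead time followed by a first-order lag and uses a brute-force grid search rather than whatever fitting procedure was used for the reported numbers. The data are synthetic: a 12 N step with an assumed 35 ms delay and 39 ms time constant plus artificial noise. All function names are hypothetical.

```python
import math
import random


def step_response(t_ms, delay_ms, tau_ms, step_n):
    """Force of a delayed first-order system after a thrust step at t = 0."""
    if t_ms < delay_ms:
        return 0.0
    return step_n * (1.0 - math.exp(-(t_ms - delay_ms) / tau_ms))


def fit_delay_and_tau(times_ms, forces_n, step_n, delays_ms, taus_ms):
    """Grid search for the delay and time constant with the least squared error."""
    best = None
    for delay in delays_ms:
        for tau in taus_ms:
            sse = sum(
                (f - step_response(t, delay, tau, step_n)) ** 2
                for t, f in zip(times_ms, forces_n)
            )
            if best is None or sse < best[0]:
                best = (sse, delay, tau)
    return best[1], best[2]


# Synthetic load-cell data sampled at 1 kHz (assumed values, not measurements).
rng = random.Random(0)
times_ms = list(range(200))
forces_n = [
    step_response(t, 35, 39, 12.0) + rng.gauss(0.0, 0.05) for t in times_ms
]

delay_ms, tau_ms = fit_delay_and_tau(
    times_ms, forces_n, 12.0, delays_ms=range(0, 81), taus_ms=range(20, 61)
)
print(f"estimated delay: {delay_ms} ms, time constant: {tau_ms} ms")
```

The grid resolution of 1 ms bounds the precision of this sketch; a continuous optimizer would be the natural refinement for real data.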

Visual-inertial state estimation

Deploying agile quadrotors outside of instrumented environments requires access to onboard state estimation. There exist many different approaches including GPS, lidar, and vision-based solutions. However, for size- and weight-constrained aerial vehicles, visual-inertial odometry has proven to be the go-to solution because of the sensors’ complementary measurement modalities, low cost, mechanical simplicity, and reusability for other purposes, such as depth estimation for obstacle avoidance.

The Agilicious platform provides a versatile sensor mount that is compatible with different sensors and can be easily adapted to fit custom sensor setups. In this work, two different visual-inertial odometry (VIO) solutions are evaluated: (i) the proprietary, off-the-shelf Intel RealSense T265 and (ii) a simple camera together with the onboard flight controller IMU and an open-source VIO pipeline in the form of SVO Pro (45, 46) with its sliding window backend. Whereas the RealSense T265 performs all computation on-chip and directly provides a state estimate via USB3.0, the alternative VIO solution uses the Jetson TX2 to run the VIO software and allows researchers to interface and modify the state estimation software. Specifically, for sensor setup (ii), a single Sony IMX-287 camera at 30 Hz with a 165° diagonal field of view is used, combined with the IMU measurements of the flight controller at 500 Hz, calibrated using the Kalibr toolbox (47).

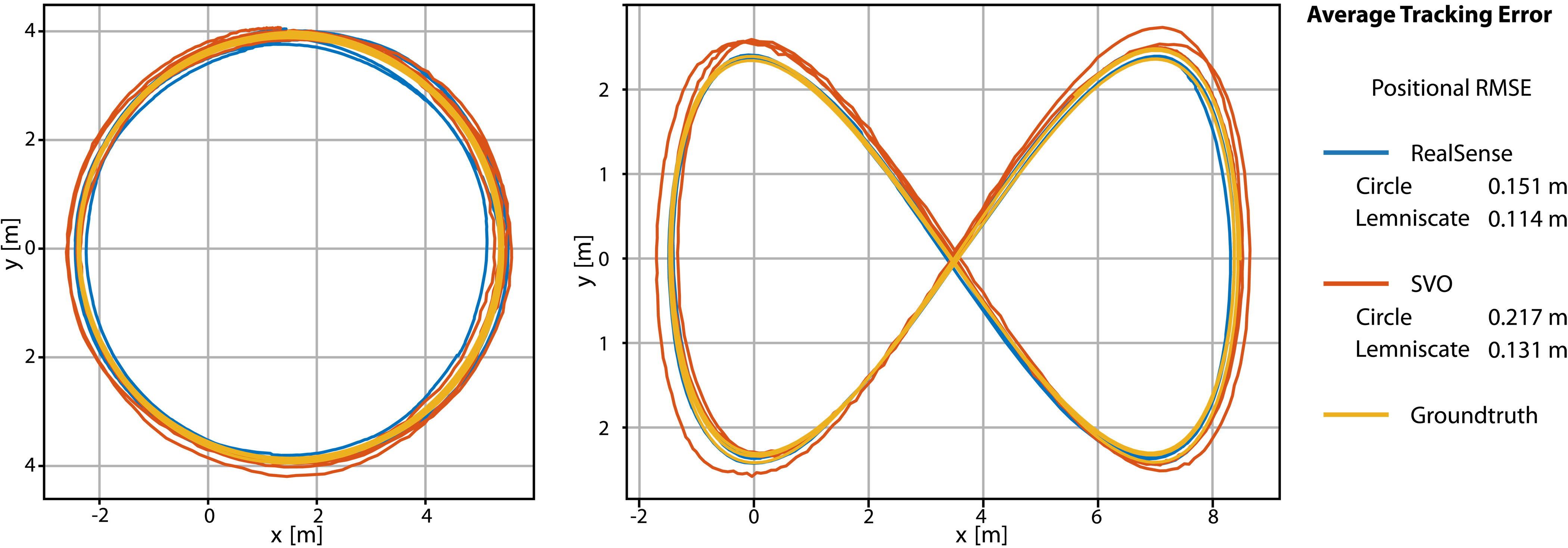

To verify their usability, a direct comparison of both VIO solutions with respect to ground truth is provided. Performance is evaluated on the basis of the estimation error (48) obtained on two trajectories flown with Agilicious. The flown trajectories consist of a circle trajectory with radius of 4 m at a speed of 5 m/s and a Lemniscate trajectory with an amplitude of 5 m at a speed of up to 7 m/s.

Figure 4 shows the performance of both VIO solutions in an xy overview of the trajectories together with their absolute trajectory error (ATE) RMSE. Both approaches perform well on both trajectories, with the Intel RealSense achieving slightly better accuracy according to the ATE of 0.151 m on the circle and 0.114 m on the Lemniscate, compared with the monocular SVO approach with 0.217 and 0.131 m, respectively. This is expected because the Intel RealSense uses a stereo camera plus IMU setup and is a fully integrated solution, whereas sensor setup (ii) aims at minimal cost by only adding a single camera and otherwise exploiting the existing flight controller IMU and onboard compute resources.
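Because a VIO estimate is expressed in its own reference frame, the estimated trajectory is usually aligned to the ground truth before the error is computed. The sketch below assumes an alignment over yaw and translation only, solved in closed form, followed by an RMSE over positions. It is a simplified illustration rather than the evaluation tool of (48); the function names and the synthetic circle data are hypothetical.

```python
import math


def align_yaw_translation(estimate, ground_truth):
    """Align estimate to ground truth with a rotation about z and a translation."""
    n = len(estimate)
    ce = [sum(p[i] for p in estimate) / n for i in range(3)]
    cg = [sum(p[i] for p in ground_truth) / n for i in range(3)]
    num = den = 0.0
    for e, g in zip(estimate, ground_truth):
        ex, ey = e[0] - ce[0], e[1] - ce[1]
        gx, gy = g[0] - cg[0], g[1] - cg[1]
        num += ex * gy - ey * gx  # summed 2D cross products
        den += ex * gx + ey * gy  # summed 2D dot products
    yaw = math.atan2(num, den)    # least-squares optimal yaw
    c, s = math.cos(yaw), math.sin(yaw)
    aligned = []
    for e in estimate:
        ex, ey, ez = e[0] - ce[0], e[1] - ce[1], e[2] - ce[2]
        aligned.append((c * ex - s * ey + cg[0], s * ex + c * ey + cg[1], ez + cg[2]))
    return aligned


def ate_rmse(estimate, ground_truth):
    """RMSE of the position error after yaw-and-translation alignment."""
    aligned = align_yaw_translation(estimate, ground_truth)
    squared = [
        sum((a - g) ** 2 for a, g in zip(pa, pg))
        for pa, pg in zip(aligned, ground_truth)
    ]
    return math.sqrt(sum(squared) / len(squared))


def to_drifted_frame(p, yaw=0.3, shift=(0.5, -0.2, 0.1)):
    """Express a point in a frame rotated about z and shifted (synthetic offset)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * p[0] - s * p[1] + shift[0], s * p[0] + c * p[1] + shift[1], p[2] + shift[2])


# Synthetic circle with a 4 m radius; the estimate differs only by a frame offset.
ground_truth = [
    (4.0 * math.cos(2 * math.pi * k / 50), 4.0 * math.sin(2 * math.pi * k / 50), 1.0)
    for k in range(50)
]
estimate = [to_drifted_frame(p) for p in ground_truth]
error = ate_rmse(estimate, ground_truth)
print(f"ATE RMSE after alignment: {error:.6f} m")
```

Since the synthetic estimate differs from the ground truth only by a frame offset, the aligned error is numerically zero; any remaining error on real data reflects estimator drift and noise.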

Fig. 4. A comparison of two different VIO solutions.

The first solution consists of the Intel RealSense T265, an off-the-shelf sensor featuring a stereo camera, an IMU, and an integrated VIO pipeline running on the integrated compute hardware. The second solution consists of a monocular camera, the IMU of the onboard flight controller, and SVO (45). The estimates of both solutions are compared against motion capture ground truth using (48) on a circle (left) and a Lemniscate trajectory (right), flown using Agilicious in an indoor environment. Both systems show accurate tracking performance and could be used as cost-effective drop-in replacements for motion capture systems and enable deployment in the wild. Although the Intel RealSense T265 is a convenient off-the-shelf option, using other cameras in combination with the onboard flight controller IMU and an open-source or custom VIO pipeline enables tailored solutions and research-oriented data access.

However, at the time of writing this manuscript, the Intel RealSense T265 is being discontinued. Other possible solutions include camera sensors, such as SevenSense (49), MYNT EYE (50), or MatrixVision (51), and other stand-alone cameras, combined with software frameworks like ArtiSense (52) or SlamCore (53) or open-source frameworks like VINS-Mono (54), OpenVINS (55), or SVO Pro (45, 46) [evaluated in (56)]. Furthermore, there are other fully integrated alternatives to the Intel RealSense (57), including the Roboception (58) and the ModalAI Voxl CAM (59).

Demonstrators

The Agilicious software and hardware stack is intended as a flexible research platform. To illustrate its broad applicability, this section showcases a set of research projects that have been enabled through Agilicious. Specifically, we demonstrate the performance of our platform in two different experimental setups covering hardware-in-the-loop (HIL) simulation and autonomous flight in the wild using only onboard sensing.

Hardware-in-the-loop simulation

Developing vision-based perception and navigation algorithms for agile flight not only is slow and time-consuming, due to the large amount of data required to train and test perception algorithms in diverse settings, but also progressively becomes less safe and more expensive because more aggressive flights can lead to devastating crashes. This motivates the Agilicious framework to support hardware-in-the-loop simulation, which consists of flying a physical quadrotor in a motion capture system while observing virtual photorealistic environments, as previously shown in (14). The key advantage of hardware-in-the-loop simulation over classical synthetic experiments (60) is the usage of real-world dynamics and proprioceptive sensors, instead of idealized virtual devices, combined with the ability to simulate arbitrarily sparse or dense environments without the risk of crashing into real obstacles.

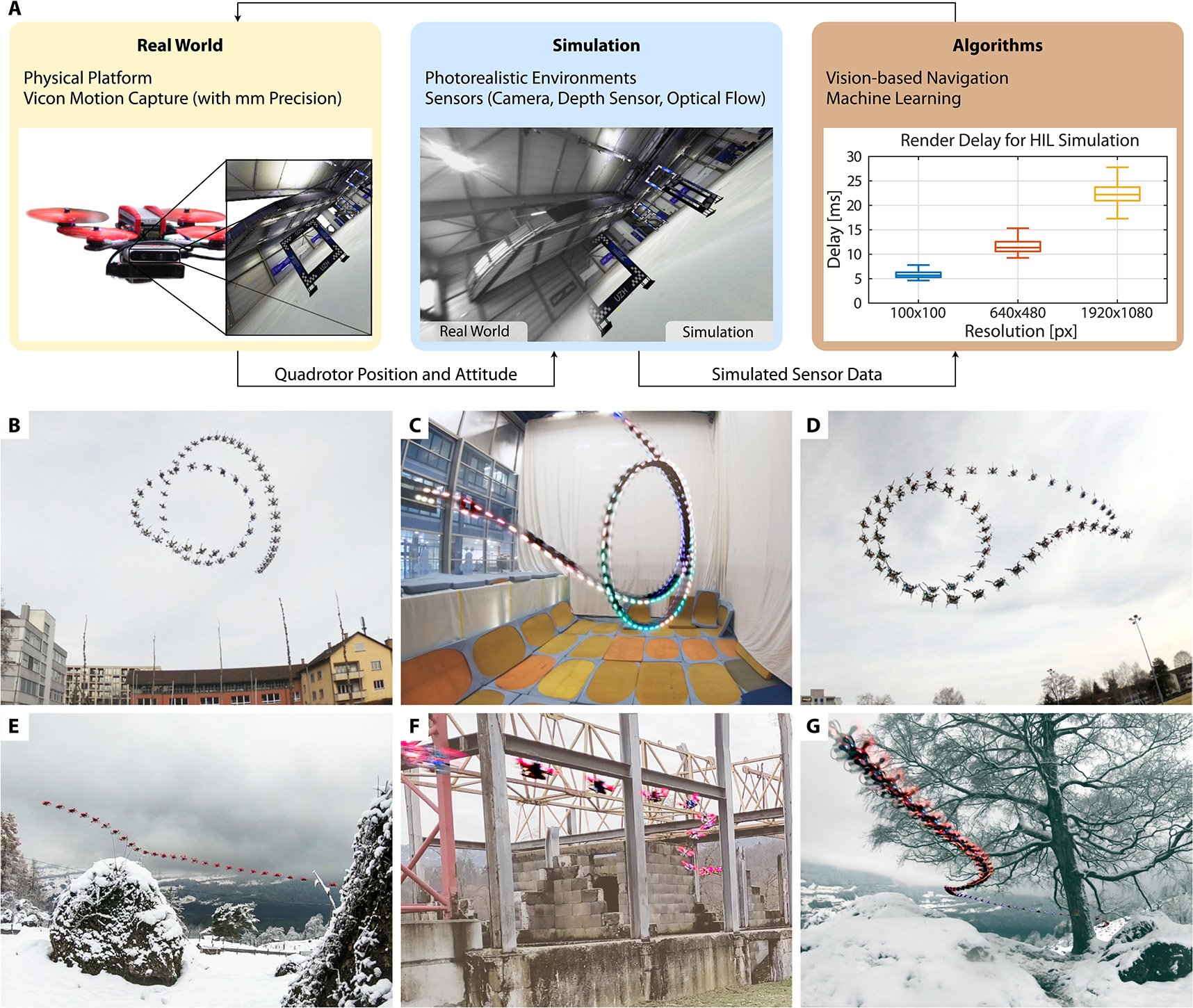

The simulation of complex three-dimensional (3D) environments and realistic exteroceptive sensors is achieved using our high-fidelity quadrotor simulator (44) built on Unity (61). The simulator offers a rich and configurable sensor suite, including RGB (red-green-blue) cameras, depth sensors, optical flow, and image segmentation, combined with variable sensor noise levels, motion blur, distortion, and diverse environmental factors such as wind and rain. It does so while introducing only minimal delays (see Fig. 5A), ranging from 13 ms for 640 × 480 VGA (video graphics array) resolution to 22 ms for 1920 × 1080 full HD (high-definition) images, when rendered on an NVIDIA RTX 2080 GPU. Overall, the integration of our agile quadrotor platform and high-fidelity visual simulation provides an efficient framework for the rapid development of vision-based navigation systems in complex and unstructured environments.

Fig. 5. Illustrations of possible deployment scenarios of the Agilicious flight stack, including simulation, hardware-in-the-loop experimentation, and autonomous flight in outdoor environments.

(A) The hardware-in-the-loop simulation of Agilicious consists of a real quadrotor flying in a motion capture system combined with photorealistic simulation of complex 3D environments. Multiple sensors can be simulated with minimal delays while virtually flying in various simulated scenes. Such hardware-in-the-loop simulation offers a modular framework for prototyping robust vision-based algorithms safely, efficiently, and inexpensively. (B to G) The Agilicious platform is deployed in a diverse set of environments while only relying on onboard sensing and computation. (B to D) The quadrotor performs a set of acrobatic maneuvers using a learned control policy. (E to G) By leveraging zero-shot sim-to-real transfer, the quadrotor platform performs agile navigation through cluttered environments.

IMAGE CREDITS: COURTESY OF (B TO D) KAUFMANN ET AL. (9) AND (E TO G) LOQUERCIO ET AL. (32).

Vision-based agile flight with onboard sensing and computation

When a quadrotor can only rely on onboard vision and computation, perception needs to be effective, low-latency, and robust to disturbances. Violating this requirement may lead to crashes, especially during agile and fast maneuvers, where latency has to be low and robustness to perception disturbances and noise must be high. However, vision systems either exhibit reduced accuracy or completely fail at high speeds due to motion blur, large pixel displacements, and quick illumination changes (62). To overcome these challenges, vision-based navigation systems generally build upon two different paradigms. The first uses the traditional perception-planning-and-control pipeline, represented by stand-alone blocks that are executed in sequence and designed independently (2, 63–67). Works in the second category substitute either parts or the complete perception-planning-and-control pipeline with learning-based methods (9, 68–76).

The Agilicious flight stack supports both paradigms and has been used to compare traditional and learning-based methods on agile navigation tasks in unstructured and structured environments (see Fig. 5). Specifically, Agilicious facilitated quantitative analyses of approaches for autonomous acrobatic maneuvers (9) (Fig. 5, B to D) and high-speed obstacle avoidance in previously unknown environments (32) (Fig. 5, E to G). Both comparisons feature a rich set of approaches consisting of traditional planning and control (31, 64, 66) as well as learning-based methods (9, 32) with different input and output modalities. Because of its flexibility, Agilicious enables an objective comparison of these approaches on a unified control stack, without biasing results due to different low-level control strategies.

DISCUSSION

The presented Agilicious framework advances the published state of the art in autonomous quadrotor platform research. It offers advanced computing capabilities combined with a powerful open-source and open-hardware quadrotor platform, opening the door for research on the next generation of autonomous robots. We see three main axes for future research based on our work.

First, we hypothesize that future flying robots will be smaller, lighter, and cheaper and will consume less power than what is possible today, increasing battery life, crash resilience, as well as TWR and TIR (23). This miniaturization is evident in state-of-the-art research toward direct hardware implementations of modern algorithms in the form of application-specific integrated circuits (ASICs), such as the Navion (77), the Movidius (78), or the PULP processor (79, 80). These highly specialized in-silicon implementations are typically orders of magnitude smaller and more efficient than general compute units. Their success is rooted in the specific structure many algorithmic problems exhibit, such as the parallel nature of image data or the factor graph representations used in estimation, planning, and control algorithms, like simultaneous localization and mapping (SLAM), MPC, and neural network inference.

Second, the presented framework was mainly demonstrated with fixed-shape quadrotors. This is an advantage because the platform is easier to model and control and less susceptible to hardware failures. Nevertheless, platforms with a dynamic morphology are by design more adaptable to the environment and potentially more power efficient (81–84). For example, to increase flight time, a quadrotor might transform to a fixed-wing aircraft (85). Because of its flexibility, Agilicious is the ideal tool for the future development of morphable and soft aerial systems.

Last, vision-based agile flight is still in the early stages and has not yet reached the performance of professional human pilots. The main challenges lie in handling complex aerodynamics, e.g., transient torques or rotor inflow, low-latency perception and state estimation, and recovery from failures at high speeds. In the last few years, considerable progress has been made by leveraging data-driven algorithms (9, 32, 40, 86) and unconventional sensors, such as event-based cameras (33, 87), that provide a high dynamic range, low latency, and low battery consumption (88). A major opportunity for future work is to complement the existing capabilities of Agilicious with unconventional compute devices such as the Intel Loihi (89–91) or SynSense Dynap (92) neuromorphic processing architecture, which are specifically designed to operate in an event-driven compute scheme. Because of the modular nature of Agilicious, individual software components can be replaced by these unconventional computing architectures, supporting rapid iteration and testing.

In summary, Agilicious offers a unique quadrotor test bed to accelerate current and future research on fast autonomous flight. Its versatility in both hardware and software allows deployment in a wide variety of tasks, ranging from exploration or search and rescue missions to acrobatic flight. Furthermore, the modularity of the hardware setup allows integrating unconventional sensors or even alternative compute hardware, so that such hardware can be tested on an autonomous agile vehicle. By open-sourcing Agilicious, we provide the research and industrial community with access to a highly agile, versatile, and extendable quadrotor platform.

MATERIALS AND METHODS

Designing a versatile and agile quadrotor research platform requires codesigning its hardware and software while carefully trading off competing design objectives such as onboard computing capacity and platform agility. In the following, the design choices that resulted in the flight hardware, compute hardware, and software design of Agilicious (see Fig. 1) are explained in detail.

Compute hardware

To exploit the full potential of highly unstable quadrotor dynamics, a high-frequency low-latency controller is needed. Both of these requirements are difficult to meet with general-purpose operating systems, which typically come without any real-time execution guarantees. Therefore, we deploy a low-level controller with limited compute capabilities but reliable real-time performance, which stabilizes high-bandwidth dynamics, such as the motor speeds or the vehicle’s body rate. This allows complementing the system with a general-purpose high-level compute unit that can run Linux for versatile software deployment, with relaxed real-time requirements.

High-level compute board

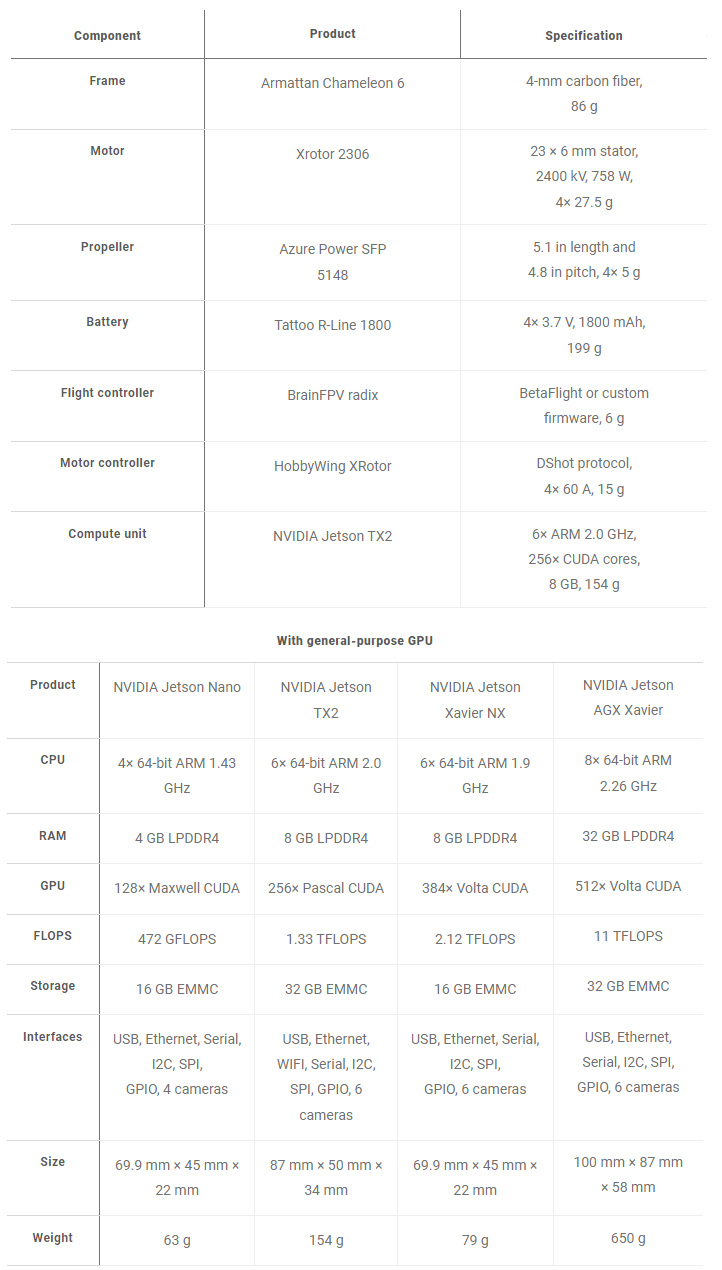

The high level of the system architecture provides all the necessary compute performance to run the full flight stack, including estimation, planning, optimization-based control, neural network inference, or other demanding experimental applications. Therefore, the main goal is to provide general-purpose computing power while complying with the strict size and weight limits. We evaluate a multitude of different compute modules made from system-on-a-chip solutions because they allow inherently small footprints. An overview is shown in Table 1. We exclude the evaluation of two popular contenders: (i) the Intel NUC platform, because it provides neither any size and weight advantage over the Jetson Xavier AGX nor a general-purpose GPU, and (ii) the Raspberry Pi compute modules, because they do not offer any compute advantages over the Odroid and UpBoard and no size and weight advantage over the NanoPi product family.

Table 1. Overview of compute hardware commonly used on autonomous flying vehicles.

Because of the emerging trend of deploying learning-based methods onboard, hardware solutions are grouped according to the presence of a general-purpose GPU, enabling real-time inference.

Because we target general flight applications, fast prototyping, and experimentation, it is important to support a wide variety of software, which is why we chose a Linux-based system. TensorFlow (93) and PyTorch (94) are some of the most prominent frameworks with hardware-accelerated neural network inference. Both of them support accelerated inference on the NVIDIA CUDA general-purpose GPU architecture, which renders NVIDIA products favorable, because other products have no or poorly supported accelerators. Therefore, four valid options remain, listed in the second row of Table 1. The Jetson Xavier AGX is beyond our size and weight goals, and the Jetson Nano provides no advantage over the Xavier NX, which leaves the Jetson TX2 and Xavier NX as viable solutions. Because these two CUDA-enabled compute modules require breakout boards to connect to peripherals, our first choice is the TX2 due to the better availability and diversity of such adapter boards and their smaller footprint. For the breakout board, we recommend the ConnectTech Quasar (95), which provides multiple USB ports, Ethernet, serial ports, and other interfaces for sensors and cameras.

Low-level flight controller

The low-level flight controller provides real-time low-latency interfacing and control. A simple and widespread option is the open-source BetaFlight (37) software that runs on many commercially available flight controllers, such as the Radix (96). However, BetaFlight is made for human-piloted drones and optimized for a good human flight feeling, not for autonomous operation. Furthermore, although it uses high-speed IMU readings for the control loop, it exposes only very limited sensor readings at 10 Hz. Therefore, Agilicious provides its own low-level flight controller implementation called “agiNuttx,” reusing the same hardware as the BetaFlight controllers. This means that the wide variety of commercially available products can be bought and reflashed with agiNuttx to provide a low-level controller suited for autonomous agile flight.

In particular, we recommend using the BrainFPV Radix (96) controller to deploy our agiNuttx software. The agiNuttx is based on the open-source NuttX (97) real-time operating system, optimized to run on embedded microcontrollers such as the STM32F4 used in many BetaFlight products. Our agiNuttx implementation interfaces with the motors’ electronic speed controller (ESC) over the digital bidirectional DShot protocol, which allows it not only to command the motors but also to receive individual rotor speed feedback. This feedback is provided to the high-level controller together with IMU, battery voltage, and flight mode information over a 1-MBaud serial bus at 500 Hz. The agiNuttx also provides closed-loop motor speed control, body rate control, and measurement time synchronization, allowing estimation and control algorithms to take full advantage of the available hardware.
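The quoted link figures imply an upper bound on how large each feedback packet can be. The following is a back-of-the-envelope sketch only: it assumes conventional 8N1 UART framing (10 bits on the wire per payload byte), which the text does not specify, and it ignores any protocol overhead.

```python
# Rough per-packet byte budget for the telemetry link described above.
# Assumption (not stated in the paper): 8N1 framing, i.e. 10 wire bits per byte.
BAUD_RATE = 1_000_000        # 1-MBaud serial bus
PACKET_RATE_HZ = 500         # feedback packets per second
BITS_PER_BYTE_ON_WIRE = 10   # 1 start + 8 data + 1 stop bit (assumed)


def max_packet_bytes(baud: int, rate_hz: int, bits_per_byte: int) -> int:
    """Upper bound on bytes per packet if the link were fully saturated."""
    bytes_per_second = baud // bits_per_byte
    return bytes_per_second // rate_hz


budget = max_packet_bytes(BAUD_RATE, PACKET_RATE_HZ, BITS_PER_BYTE_ON_WIRE)
print(f"at most {budget} bytes per packet")
```

Under these assumptions, IMU, rotor speed, voltage, and flight mode data must share a budget on the order of a couple of hundred bytes per packet.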

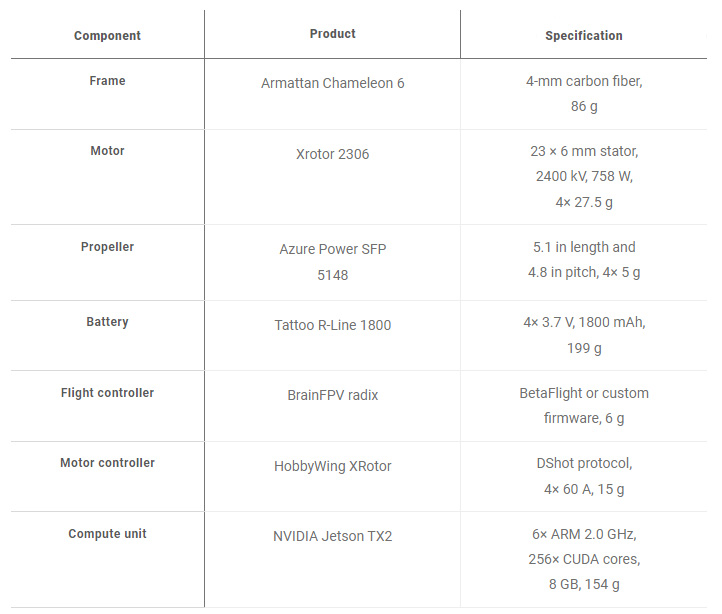

Flight hardware

To maximize the agility of the drone, it needs to be designed to be as lightweight and small as possible (22) while still being able to accommodate the Jetson TX2 compute unit. With this goal in mind, we provide a selection of cheap off-the-shelf drone components summarized in Table 2. The Armattan Chameleon 6 frame is used as a base because it is one of the smallest frames with ample space for the compute hardware. Being made out of carbon fiber, it is durable and lightweight. The other structural parts of the quadrotor are custom-designed plastic parts [polylactic acid (PLA) and thermoplastic polyurethane (TPU) material] produced using a 3D printer. Most components are made out of PLA, which is stiffer, and only parts that act as impact protectors or as predetermined breaking points are made out of TPU. For propulsion, a 5.1-inch three-bladed propeller is used in combination with a fast-spinning brushless DC motor rated at a high maximum power of 758 W. The chosen motor-propeller combination achieves a continuous static thrust of 4 × 9.5 N on the quadrotor and consumes about 400 W of power per motor. To match the high power demand of the motors, a lithium-polymer battery with 1800 mAh and a rating of 120 C is used. With this battery, the total peak current of 110 A is well within the 216-A limit (1.8 Ah × 120 C). The motors are powered by an ESC in the form of the Hobbywing XRotor ESC, chosen for its compact form factor, its high continuous current rating (60 A per motor), and its support for the DShot protocol with motor speed feedback.
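The current margins quoted above can be checked with a few lines of arithmetic. This is a minimal sketch using only the numbers stated in the text; the even split of the peak current across the four motors is a simplifying assumption.

```python
# Battery and ESC current margins, using the figures quoted in the text.
CAPACITY_MAH = 1800          # battery capacity
C_RATING = 120               # discharge rating (multiples of capacity per hour)
PEAK_CURRENT_A = 110.0       # total peak current of the four motors
ESC_LIMIT_PER_MOTOR_A = 60.0 # continuous ESC rating per motor
NUM_MOTORS = 4


def battery_current_limit_a(capacity_mah: int, c_rating: int) -> float:
    """Maximum discharge current: capacity in Ah times the C rating."""
    return capacity_mah * c_rating / 1000.0


limit_a = battery_current_limit_a(CAPACITY_MAH, C_RATING)
battery_margin = limit_a / PEAK_CURRENT_A
# Assumption: the peak current is shared equally by the four motors.
per_motor_a = PEAK_CURRENT_A / NUM_MOTORS

print(f"battery limit: {limit_a:.0f} A, margin: {battery_margin:.2f}x")
print(f"per-motor peak: {per_motor_a:.1f} A of {ESC_LIMIT_PER_MOTOR_A:.0f} A")
```

Both the battery and the ESC therefore operate at roughly half of their rated current at peak load.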

Table 2. Overview of the components of the flight hardware design.

Sensors

To navigate arbitrary uninstrumented environments, drones need means to measure their absolute or relative location and state. Because of the size and weight constraints of aerial vehicles and especially the direct impact of weight and inertia on the agility of the vehicle, VIO has proven to be the go-to solution for aerial navigation. The complementary sensing modality of cameras and IMUs and their low price and excellent availability, together with the depth-sensing capabilities of stereo camera configurations, allow for a simple, compact, and complete perception setup. Furthermore, our agiNuttx flight controller already provides high-rate filtered inertial measurements and can be combined with any off-the-shelf camera (49–51) and open-source (45, 46, 54, 55) or commercial (52, 53) software to implement a VIO pipeline. In addition, there exist multiple fully integrated products providing out-of-the-box VIO solutions, such as the Intel RealSense (57), the Roboception rc_visard (58), and the ModalAI Voxl CAM (59).

The Agilicious flight stack software

To exploit the full potential of our platform and enable fast prototyping, we provide the Agilicious flight stack as an open-source software package. The main development goals for Agilicious are aligned with our overall design goals: high versatility and modularity, low latency for agile flight, and transferability between simulation and multiple real-world platforms. These goals are met by splitting the software stack into two parts.

The core library, called “agilib,” is built with minimal dependencies but provides all functionality needed for agile flight, implemented as individual modules (illustrated in Fig. 1). It can be deployed on a large range of computing platforms, from lightweight low-power devices to parallel neural network training farms built on heterogeneous server architectures. This is enabled by avoiding dependencies on other software components that could introduce compatibility issues and by relying only on the C++17 standard and the Eigen library for linear algebra. In addition, agilib includes a stand-alone set of unit tests and benchmarks that can be run independently, with minimal dependencies, and in a self-contained manner.

To provide compatibility to existing systems and software, the second component is a ROS wrapper, called “agiros,” which enables networked communication and data logging, provides a simple graphical user interface (GUI) to command the vehicle, and allows for integration with other software components. This abstraction between “agiros” and the core library “agilib” allows a more flexible deployment on systems or in environments where ROS is not available or not needed or communication overhead must be avoided. On the other hand, the ROS-enabled Agilicious provides versatility and modularity due to a vast number of open-source ROS packages provided by the research community.

For flexible and fast development, “agilib” uses modular software components unified in an umbrella structure called “pipeline” and orchestrated by a control logic, called “pilot.” The modules consist of an “estimator,” “sampler,” “controllers,” and a “bridge,” all working together to track a so-called “reference.” These modules are executed in sequential order (illustrated in Fig. 1) within a forward pass of the pipeline, corresponding to one control cycle. However, each module can spawn its individual threads to perform parallel tasks, e.g., asynchronous sensor data processing. Agilicious provides a collection of state-of-the-art implementations for each module, inherited from base classes, which allows new implementations to be created outside of the core library and linked into the pilot at runtime. Moreover, Agilicious not only is capable of controlling a drone when running onboard the vehicle but also can run offboard on computationally more capable hardware and send commands to the drone over low-latency wireless serial interfaces.
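The sequential forward pass can be illustrated with a small sketch. It is written in Python for brevity, whereas agilib itself is C++; the class name, field names, and call signatures below are hypothetical and do not reflect the actual agilib interfaces.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class Pipeline:
    """Hypothetical stand-in for one pipeline configuration."""
    estimator: Callable[[float], dict]                   # time -> state estimate
    sampler: Callable[[dict, float], list]               # state, time -> setpoints
    controllers: List[Callable[[dict, list, Any], Any]]  # cascaded controllers
    bridge: Callable[[Any], None]                        # sends the final command

    def run(self, t: float) -> Any:
        """One control cycle: estimator -> sampler -> controllers -> bridge."""
        state = self.estimator(t)
        setpoints = self.sampler(state, t)
        command = None
        for controller in self.controllers:
            # Each controller may refine the command of the previous one.
            command = controller(state, setpoints, command)
        self.bridge(command)
        return command


# Toy 1-D example: drive the position toward a single setpoint at 1.0.
sent = []
pipeline = Pipeline(
    estimator=lambda t: {"t": t, "position": 0.0},
    sampler=lambda state, t: [1.0],
    controllers=[lambda state, sp, prev: 2.0 * (sp[0] - state["position"])],
    bridge=sent.append,
)
command = pipeline.run(0.0)
print(command, sent)
```

Passing the modules in as plain callables mirrors the idea that implementations can be swapped without touching the control loop itself.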

Last, the core library is completed by a physics simulator. Although this might seem redundant given the variety of simulation pipelines available (44, 60, 98), it allows the use of high-fidelity models [e.g., BEM (40) for aerodynamics], the evaluation of software prototypes without having to interface with other frameworks, and simulation-based continuous integration testing that can run on virtually any platform, all without adding dependencies. The pilot, software modules, and simulator are all described in the following sections.

Pilot

The pilot contains the main logic needed for flight operation, handling of the individual modules, and interfaces to manage references and task commands. At its core, it loads and configures the software modules according to YAML (YAML Ain’t Markup Language) (99) parameter files, runs the control loop, and provides simplified user interfaces to manage flight tasks, such as position and velocity control or trajectory tracking. For all state descriptions, we use a right-handed body coordinate system located in the center of gravity, with ${}_B e_z$ pointing in the body-relative upward thrust direction and ${}_B e_x$ pointing along the drone’s forward direction. Motion is represented with respect to an inertial world frame, with ${}_I e_z$ pointing against the gravity direction, where translational derivatives (e.g., velocity) are expressed in the world frame and rotational derivatives (e.g., body rate) are expressed in the body frame.

Pipeline

The pipeline is a distinct configuration of the sequentially processed modules. These pipeline configurations can be switched at runtime by the pilot or the user, allowing switching to backup configurations in an emergency or quickly alternating between different prototyping configurations.

Estimator

The first module in the pipeline is the estimator, which provides a time-stamped state estimate for the subsequent software modules in the control cycle. A state estimate $x = [p, q, v, \omega, a, \tau, j, s, b_\omega, b_a, f_d, f]$ represents position $p$, orientation unit quaternion $q$, velocity $v$, body rate $\omega$, linear $a$ and angular $\tau$ accelerations, jerk $j$, snap $s$, gyroscope and accelerometer bias $b_\omega$ and $b_a$, and desired and actual single-rotor thrusts $f_d$ and $f$. Agilicious provides a feed-through estimator to include external estimates or ground truth from a simulation, as well as two extended Kalman filters, one with IMU filtering and one using the IMU as propagation model. These estimators can easily be replaced or extended to work with additional measurement sources, such as GPS or altimeters, and other estimation systems, or even to implement complex localization pipelines such as visual-inertial odometry.

Sampler

For trajectory tracking using a state estimate from the aforementioned estimator, the controller module needs to be provided with a subset of points of the trajectory that encode the desired progress along it, provided by the sampler. Agilicious implements two types of samplers: a time-based sampling scheme that computes progress along the trajectory based on the time since trajectory start and a position-based sampling scheme that selects trajectory progress based on the current position of the platform, trading off temporally accurate tracking for higher robustness and lower positional tracking error.
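The difference between the two sampling schemes can be sketched as follows. This is an illustrative Python sketch, not the agilib implementation: the trajectory format, function names, and the nearest-point rule used for position-based sampling are assumptions.

```python
import math

# Hypothetical trajectory: (time [s], x [m], y [m]) samples along a straight line.
TRAJECTORY = [(0.1 * i, 0.5 * i, 0.0) for i in range(20)]


def sample_time_based(trajectory, elapsed, horizon):
    """Start the horizon at the first sample whose time stamp is >= elapsed."""
    start = next(
        (i for i, point in enumerate(trajectory) if point[0] >= elapsed),
        len(trajectory) - 1,
    )
    return trajectory[start:start + horizon]


def sample_position_based(trajectory, position, horizon):
    """Start the horizon at the sample closest to the current position."""
    start = min(
        range(len(trajectory)),
        key=lambda i: math.dist(trajectory[i][1:], position),
    )
    return trajectory[start:start + horizon]


# The vehicle lags behind schedule: 0.45 s have elapsed, but it is only near x = 1.1 m.
by_time = sample_time_based(TRAJECTORY, elapsed=0.45, horizon=3)
by_position = sample_position_based(TRAJECTORY, position=(1.1, 0.2), horizon=3)
print(by_time[0], by_position[0])
```

In this example the time-based sampler demands the setpoint scheduled for the current time even though the vehicle has fallen behind, whereas the position-based sampler restarts the horizon at the point nearest to the vehicle.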

Controller

To control the vehicle along the sampled reference setpoints, a multitude of controllers are available, which provide the closed-loop commands for the low-level controller. We provide a state-of-the-art MPC that uses the full nonlinear model of the platform and that allows tracking highly agile references using single-rotor thrust commands or body rate control. In addition, we include a cascaded geometric controller based on the quadrotor’s differential flatness (100). The pipeline can cascade two controllers, which even allows combining the aforementioned MPC (38) or geometric approaches with an intermediate controller for which we provide an L1 adaptive controller (101) and an incremental nonlinear dynamic inversion controller (38).

Bridge

A bridge serves as an interface to hardware or software by sending control commands to a low-level controller or another communication sink. Low-level commands can be either single-rotor thrusts or body rates in combination with a collective thrust. Agilicious provides a set of bridges to communicate via commonly used protocols such as ROS, SBUS, and serial. Whereas the ROS bridge can be used to easily integrate Agilicious in an existing software stack that relies on ROS, the SBUS protocol is a widely used standard in the FPV community and therefore allows Agilicious to interface with off-the-shelf flight controllers such as BetaFlight (37). For simple simulation, there is a specific bridge to interface with the popular RotorS (60) simulator, which is, however, less accurate than our own simulation described in the “Simulation” section. Because Agilicious is written in a general abstract way, it runs both on onboard compute modules and offboard; for the latter case, we provide a bridge to interface with the LAIRD (39) wireless serial interface. Last, Agilicious also provides a bridge to communicate with the custom low-level controller described in the “Low-level flight controller” section. This provides the advantage of gaining access to closed-loop single-rotor speed control and to high-frequency IMU, rotor speed, and voltage measurements at 500 Hz, all provided to the user through the bridge.

References

References are used in conjunction with a controller to encode the desired flight path of a quadrotor. In Agilicious, a reference is fed to the sampler, which generates a receding horizon vector of setpoints that are then passed to the controller. The software stack implements a set of reference types, consisting of Hover, Velocity, Polynomial, and Sampled. Whereas Hover references are uniquely defined by a reference position and a yaw angle, a Velocity reference specifies a desired linear velocity with a yaw rate. By exploiting the differential flatness of the quadrotor platform, Polynomial references describe the position and yaw of the quadrotor as polynomial functions of time. Sampled references provide the most general reference representations. Agilicious provides interfaces to generate and receive such sampled references and also defines a message and file format to store references to a file. By defining such formats, a wide variety of trajectories can be generated, communicated, saved, and executed using Python or other languages. Last, to simplify the integration and deployment of other control approaches, Agilicious also exposes a command feedthrough, which allows taking direct control over the applied low-level commands. For safety, even when command feedthrough is used, Agilicious provides readily available back-up control that can take over on user request or on timeout.
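As an illustration of a Polynomial reference, the sketch below evaluates a polynomial and its time derivatives, which is what a sampler would need to produce position, velocity, and acceleration setpoints. The coefficient layout and the function name are hypothetical; the text does not describe the format Agilicious uses.

```python
def poly_eval(coeffs, t, derivative=0):
    """Evaluate the k-th time derivative of sum(c_i * t**i) at time t.

    coeffs are ordered from the constant term upward (an assumed layout).
    """
    c = list(coeffs)
    for _ in range(derivative):
        # Differentiate once: c_i * t**i -> i * c_i * t**(i - 1).
        c = [i * ci for i, ci in enumerate(c)][1:]
    result = 0.0
    for ci in reversed(c):  # Horner's scheme
        result = result * t + ci
    return result


# Example: x(t) = 1 + 2 t + 3 t^2, evaluated at t = 2 s.
x_coeffs = [1.0, 2.0, 3.0]
position = poly_eval(x_coeffs, 2.0)
velocity = poly_eval(x_coeffs, 2.0, derivative=1)
acceleration = poly_eval(x_coeffs, 2.0, derivative=2)
print(position, velocity, acceleration)
```

Because the derivatives are available in closed form, higher-order quantities such as jerk and snap follow from the same routine.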

Guard

To further support users in fast prototyping, Agilicious provides a so-called guard. This guard uses the quadrotor’s state estimate or an alternative estimate (e.g., from motion capture when flying with VIO prototypes) together with a user-defined spatial bounding box to detect unexpected deviations from the planned flight path. Further detection metrics can be implemented by the user. Upon violation of, e.g., the spatial bounding box, the guard can switch control to an alternative pipeline using a backup estimate and control configuration. This safety pipeline can, e.g., use a motion capture system and a simple geometric controller, whereas the main pipeline runs a VIO estimator, an MPC, reinforcement learning control strategies, or other software prototypes. By providing this measure of backup, Agilicious substantially reduces the risk of crashes when testing new algorithms and allows iterating over research prototypes faster.
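The bounding-box check at the heart of the guard can be sketched in a few lines. The class and method names are hypothetical, and the latching behavior (staying on the safety pipeline once triggered) is a design assumption of this sketch rather than something stated in the text.

```python
def inside_box(position, box_min, box_max):
    """True if every coordinate lies within the axis-aligned bounding box."""
    return all(lo <= p <= hi for p, lo, hi in zip(position, box_min, box_max))


class Guard:
    """Hypothetical guard that selects the pipeline to run in each cycle."""

    def __init__(self, box_min, box_max):
        self.box_min = box_min
        self.box_max = box_max
        self.triggered = False

    def select(self, position, main_pipeline, safety_pipeline):
        if not inside_box(position, self.box_min, self.box_max):
            # Assumption: once triggered, the guard stays latched.
            self.triggered = True
        return safety_pipeline if self.triggered else main_pipeline


guard = Guard(box_min=(-2.0, -2.0, 0.0), box_max=(2.0, 2.0, 3.0))
first = guard.select((0.0, 0.0, 1.0), "main", "safety")    # inside the box
second = guard.select((2.5, 0.0, 1.0), "main", "safety")   # left the box
third = guard.select((0.0, 0.0, 1.0), "main", "safety")    # back inside, still latched
print(first, second, third)
```

The position passed to the guard would come from the backup estimate, e.g., motion capture, so that a failure of the prototype estimator does not blind the guard.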

Simulation

The Agilicious software stack includes a simulator that allows simulating quadrotor dynamics at various levels of fidelity to accelerate prototyping and testing. Specifically, Agilicious models motor dynamics and aerodynamics acting on the platform. To also incorporate the different, possibly off-the-shelf, low-level controllers that can be used on the quadrotors, the simulator can optionally simulate the behavior of low-level controllers. One simulator update, typically called at 1 kHz, includes a call to the simulated low-level controller, the motor model, the aerodynamics model, and the rigid body dynamics model in a sequential fashion. Each of these components is explained in the following.

Low-level controller and motor model

Simulated low-level controllers run at simulation frequency and convert collective thrust and body rate commands into individual motor speed commands. The usage of a simulated low-level controller is optional if the computed control commands are already in the form of individual rotor thrusts. In this case, the thrusts are mapped to motor speed commands and then directly fed to the simulated motor model. The motors are modeled as a first-order system with a time constant that can be identified on a thrust test stand.
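A first-order motor model of this kind obeys $\dot{\omega} = (\omega_{cmd} - \omega)/\tau_m$, where $\tau_m$ denotes the motor time constant. The sketch below uses the exact discretization for a command held constant over one step; the function name and the numerical values (30 ms time constant, 1 ms step) are illustrative assumptions, not identified parameters.

```python
import math


def motor_step(omega, omega_cmd, time_constant, dt):
    """Advance a first-order motor model by dt.

    Exact solution of d(omega)/dt = (omega_cmd - omega) / time_constant
    for a command that is constant during the step.
    """
    alpha = 1.0 - math.exp(-dt / time_constant)
    return omega + alpha * (omega_cmd - omega)


# Step response: command 1000 rad/s from rest and simulate one time constant.
TIME_CONSTANT_S = 0.03   # hypothetical value; identified on a thrust stand in practice
DT_S = 0.001             # assumed simulation step
omega = 0.0
for _ in range(30):
    omega = motor_step(omega, 1000.0, TIME_CONSTANT_S, DT_S)
print(f"{omega:.1f} rad/s after one time constant")
```

After one time constant the rotor has covered about 63% of the commanded step, which is the property used to identify the time constant from test-stand data.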

Aerodynamics

The aerodynamics module models the lift and drag produced by the rotors based on the current ego-motion of the platform and the individual rotor speeds. Agilicious implements two rotor models: Quadratic and BEM. The Quadratic model implements a simple quadratic mapping from rotor rotational speed to produced thrust, as commonly done in quadrotor simulators (44, 60, 98). Although such a model does not account for effects imposed by the movement of a rotor through the air, it is highly efficient to compute. In contrast, the BEM model leverages blade element momentum (BEM) theory to account for the effects of varying relative airspeed on the rotor thrust. To further increase the fidelity of the simulation, a neural network predicting the residual forces and torques (e.g., unmodeled rotor-to-rotor interactions and turbulence) can be integrated into the aerodynamics model. For details regarding the BEM model and the neural network augmentation, we refer the reader to (40).
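The Quadratic model amounts to $f = c\,\omega^2$ with a single thrust coefficient $c$. The sketch below shows the mapping and its inverse, which converts a thrust command into a motor speed command; the coefficient value is a hypothetical placeholder, not an identified parameter of the platform.

```python
import math

THRUST_COEFF = 1.5e-6  # hypothetical coefficient in N / (rad/s)^2


def rotor_thrust(omega, thrust_coeff=THRUST_COEFF):
    """Quadratic model: thrust grows with the square of the rotor speed."""
    return thrust_coeff * omega ** 2


def speed_for_thrust(thrust, thrust_coeff=THRUST_COEFF):
    """Inverse mapping used to turn a thrust command into a speed command."""
    return math.sqrt(thrust / thrust_coeff)


# Rotor speed needed for the 9.5 N static thrust per rotor quoted in the text.
omega_max = speed_for_thrust(9.5)
print(f"{omega_max:.0f} rad/s for 9.5 N with the assumed coefficient")
```

Doubling the rotor speed quadruples the thrust in this model, independent of how fast the rotor moves through the air, which is exactly the effect the BEM model adds.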

Rigid body dynamics

Provided with a model of the forces and torques acting on the platform predicted by the aerodynamics model, the system dynamics of the quadrotor are integrated using a fourth-order Runge-Kutta scheme with a step size of 1 ms. Agilicious also implements other integrators, such as explicit Euler and symplectic Euler.
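A fourth-order Runge-Kutta step can be sketched as follows. To stay short, the example integrates only the vertical motion of a point mass rather than full rigid body dynamics; the function names, the thrust acceleration, and the 1 ms step are assumptions of this sketch.

```python
def rk4_step(f, x, dt):
    """One classical fourth-order Runge-Kutta step for dx/dt = f(x)."""
    k1 = f(x)
    k2 = f([xi + 0.5 * dt * ki for xi, ki in zip(x, k1)])
    k3 = f([xi + 0.5 * dt * ki for xi, ki in zip(x, k2)])
    k4 = f([xi + dt * ki for xi, ki in zip(x, k3)])
    return [
        xi + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
        for xi, a, b, c, d in zip(x, k1, k2, k3, k4)
    ]


GRAVITY = 9.81        # m/s^2
THRUST_ACCEL = 12.0   # hypothetical mass-normalized collective thrust, m/s^2


def vertical_dynamics(state):
    """State is [height, vertical velocity]; thrust acts against gravity."""
    _, velocity = state
    return [velocity, THRUST_ACCEL - GRAVITY]


state = [0.0, 0.0]
for _ in range(1000):  # 1 s of flight at an assumed 1 ms step
    state = rk4_step(vertical_dynamics, state, 0.001)
print(state)
```

For this constant-acceleration case the scheme reproduces the analytic solution, a height of 1.095 m and a velocity of 2.19 m/s after 1 s, up to floating-point rounding.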

Apart from providing its own state-of-the-art quadrotor simulator, Agilicious can also be interfaced with external simulators. Interfaces to the widely used RotorS quadrotor simulator (60) and Flightmare (44), including the HIL simulator, are already provided in the software stack.

Acknowledgments

Funding:

This work was supported by the National Centre of Competence in Research (NCCR) Robotics through the Swiss National Science Foundation (SNSF) and the European Union’s Horizon 2020 Research and Innovation Program under grant agreement no. 871479 (AERIAL-CORE) and the European Research Council (ERC) under grant agreement no. 864042 (AGILEFLIGHT).

Author contributions:

P.F. developed the Agilicious software concepts and architecture, contributed to the Agilicious implementation, helped with the experiments, and wrote the manuscript. E.K., A.R., R.P., S.S., and L.B. contributed to the Agilicious implementation, helped with the experiments, and wrote the manuscript. T.L. evaluated the hardware components, designed and built the Agilicious hardware, helped with the experiments, and wrote the manuscript. Y.S. contributed to the Agilicious implementation, helped with the experiments, and wrote the manuscript. A.L. and G.C. helped with the experiments and wrote the manuscript. D.S. provided funding, contributed to the design and analysis of the experiments, and revised the manuscript.

Competing interests:

The authors declare that they have no competing interests.

Data and materials availability:

The main purpose of this paper is to share our data and materials. Therefore, all materials, both software and hardware designs, are open-source accessible at https://agilicious.dev under the GPL v3.0 license.
